Azure Failover Cluster with Shared Disk

Recently Microsoft Azure announced the preview feature “Shared Disk”. A Shared Disk can be attached to two or more Azure VMs at the same time. Its purpose is to support clustered workloads such as SQL Server Failover Cluster Instances (FCI), Scale-out File Server (SoFS), File Server for General Use (IW workload), Remote Desktop Server User Profile Disk (RDS UPD) and SAP ASCS/SCS. In other words, we can build a failover cluster on Azure VMs much like an on-premises failover cluster with shared storage.

In this post I will demonstrate, step by step, how to create a Failover Cluster in Azure with two Azure VMs and a Shared Disk.

Since this is a preview feature at the time I am writing this post, we need to sign up for the preview.

Once we have the confirmation that the Shared Disk preview is enabled on our subscription, we can proceed and create our Shared Disk. Currently this can be done only by using an ARM template, in order to define the "maxShares": "[parameters('maxShares')]" disk property. This property defines how many VMs the disk can be attached to at the same time.

| Disk sizes | maxShares limit |
|---|---|
| P15, P20 | 2 |
| P30, P40, P50 | 5 |
| P60, P70, P80 | 10 |
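The limits in the table can be encoded as a small lookup to sanity-check a planned maxShares value before deploying. This is only an illustrative sketch: the function name Test-MaxShares is hypothetical, and the values are simply the preview limits listed above, which may change.

```powershell
# Preview limits copied from the table above (disk tier -> maxShares limit).
$maxSharesLimit = @{
    P15 = 2;  P20 = 2
    P30 = 5;  P40 = 5;  P50 = 5
    P60 = 10; P70 = 10; P80 = 10
}

# Hypothetical helper: returns $true when the requested value fits the tier.
function Test-MaxShares {
    param(
        [Parameter(Mandatory)] [string] $Tier,
        [Parameter(Mandatory)] [int]    $MaxShares
    )
    if (-not $maxSharesLimit.ContainsKey($Tier)) {
        throw "Tier '$Tier' is not listed in the preview limits table."
    }
    return ($MaxShares -ge 1 -and $MaxShares -le $maxSharesLimit[$Tier])
}

Test-MaxShares -Tier P30 -MaxShares 2   # True
Test-MaxShares -Tier P15 -MaxShares 5   # False
```

A 1024 GiB premium disk corresponds to the P30 tier, so a maxShares value of 2 for that size is within the limit.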

Other limitations of the preview are:

- Currently only available with premium SSDs.

- Currently only supported in the West Central US region.

- All virtual machines sharing a disk must be deployed in the same proximity placement group.

- Can only be enabled on data disks, not OS disks.

- Only basic disks can be used with some versions of Windows Server Failover Cluster; for details see Failover clustering hardware requirements and storage options.

- ReadOnly host caching is not available for premium SSDs with maxShares>1.

- Availability sets and virtual machine scale sets can only be used with FaultDomainCount set to 1.

- Azure Backup and Azure Site Recovery support is not yet available.

In the Azure Portal, search for and open “Template Deployment”.

Select “Build your own template in the editor”

and paste the Shared Disk JSON into the template.

The Shared Disk JSON:

    {
      "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
      "contentVersion": "1.0.0.0",
      "parameters": {
        "dataDiskName": {
          "type": "string",
          "defaultValue": "mySharedDisk"
        },
        "dataDiskSizeGB": {
          "type": "int",
          "defaultValue": 1024
        },
        "maxShares": {
          "type": "int",
          "defaultValue": 2
        }
      },
      "resources": [
        {
          "type": "Microsoft.Compute/disks",
          "name": "[parameters('dataDiskName')]",
          "location": "[resourceGroup().location]",
          "apiVersion": "2019-07-01",
          "sku": {
            "name": "Premium_LRS"
          },
          "properties": {
            "creationData": {
              "createOption": "Empty"
            },
            "diskSizeGB": "[parameters('dataDiskSizeGB')]",
            "maxShares": "[parameters('maxShares')]"
          }
        }
      ]
    }
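As an alternative to pasting the template into the portal editor, the same template can probably be deployed from PowerShell. The following is a minimal sketch, not the method used in this post. It assumes the Az PowerShell module is installed, that Connect-AzAccount has already been run, that the JSON above is saved locally as shared-disk.json, and that a resource group with the hypothetical name SharedDiskRG already exists in a supported region.

```powershell
# Assumptions: Az module installed, already signed in with Connect-AzAccount,
# template saved as .\shared-disk.json, resource group name is hypothetical.
$rg = "SharedDiskRG"

New-AzResourceGroupDeployment `
    -ResourceGroupName $rg `
    -TemplateFile ".\shared-disk.json" `
    -TemplateParameterObject @{
        dataDiskName   = "mySharedDisk"
        dataDiskSizeGB = 1024
        maxShares      = 2
    }

# Confirm that the maxShares property was applied to the new disk.
(Get-AzDisk -ResourceGroupName $rg -DiskName "mySharedDisk").MaxShares
```

The parameter names in the hashtable match the parameters declared in the template, so any of them can be omitted to fall back to the template defaults.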

After the Template Deployment is completed, you will see a normal disk in the Resource Group.

Create the VMs

Create both VMs like any normal Azure VM. I created two Windows Server 2016 VMs. Both VMs must be added to the same availability set (Fault Domain = 1), so that they can be placed behind an internal load balancer, and they must be added to the same Proximity Placement Group.

To create a Proximity Placement Group, create a new resource, search for “Proximity Placement Group” and press Create. Provide a name for the PPG and a location, which must be the same as the location of the disk.

Then select it in the VM creation wizard:

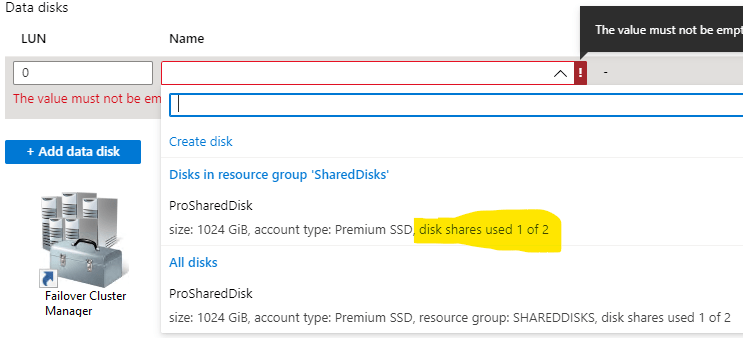
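The Proximity Placement Group can also be created from PowerShell instead of the portal. This is a hedged sketch under the same assumptions as before (Az module, signed-in session); the resource group and PPG names are hypothetical, and the location shown is the preview region mentioned in the limitations list.

```powershell
# Hypothetical names; the location must match the region of the shared disk.
$ppg = New-AzProximityPlacementGroup `
    -ResourceGroupName "SharedDiskRG" `
    -Name "SharedDiskPPG" `
    -Location "westcentralus" `
    -ProximityPlacementGroupType Standard

# The resource ID is what the VMs and the availability set refer to.
$ppg.Id
```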

Attach the Shared Disk to Both VMs

When attaching the Shared Disk to the VMs you will see the “disk shares used” value. This reports how many VMs the disk is currently attached to.
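Attaching the disk to both VMs can be scripted as well. The sketch below assumes the VM names SDVM01 and SDVM02 used later in this post, the default disk name mySharedDisk from the template, a hypothetical resource group name, and that LUN 0 is free on both VMs.

```powershell
# Assumptions: resource group name is hypothetical, LUN 0 is unused on both VMs.
$rg   = "SharedDiskRG"
$disk = Get-AzDisk -ResourceGroupName $rg -DiskName "mySharedDisk"

foreach ($name in "SDVM01", "SDVM02") {
    $vm = Get-AzVM -ResourceGroupName $rg -Name $name
    # Attach the existing managed disk as a data disk.
    $vm = Add-AzVMDataDisk -VM $vm -Name $disk.Name -CreateOption Attach `
        -ManagedDiskId $disk.Id -Lun 0
    Update-AzVM -VM $vm -ResourceGroupName $rg
}
```

The same disk ID is passed for both VMs; this only succeeds because the disk was created with maxShares set to 2 or higher.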

Once the VMs are ready we can start the Failover Cluster process. We need to join both VMs to the domain. Then go to Disk Management and bring the disk online (GPT) on both servers. The next step is to enable the Failover Clustering feature on both VMs. This can be easily accomplished using the commands below:

Install-WindowsFeature -Name Failover-Clustering -IncludeManagementTools -ComputerName SDVM01

Install-WindowsFeature -Name Failover-Clustering -IncludeManagementTools -ComputerName SDVM02

Restart both VMs.

Let's start the Failover Cluster Wizard. Select both Azure VMs and run the validation with all tests.
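The same validation can also be started from PowerShell with the Test-Cluster cmdlet, which is part of the Failover Clustering tools installed above. This is a minimal sketch, assuming it is run on one of the two nodes by a domain account with administrative rights on both.

```powershell
# Runs all validation tests against both nodes and produces a validation report.
Test-Cluster -Node SDVM01, SDVM02
```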

The report should show success for all storage-related tests. Only the cluster IP will fail, since the wizard cannot allocate an Azure IP.

So we will not create the cluster using the GUI wizard. We will create the cluster using the New-Cluster command, to specify the cluster IP. But first let's create the load balancer for the cluster IP.

To enable the cluster IP we need to create an internal Standard Load Balancer with a static IP. Add both VMs to the backend pool of the load balancer.

Then run this command on one of the two VMs:

New-Cluster -Name cluster1 -Node SDVM01,SDVM02 -StaticAddress 192.168.0.10

The cluster is ready and we can now add the disk to the cluster.

Right-click Cluster Disk 1 and select “Add to cluster shared volumes”.

Wait until the disk is online

and check the C:\ClusterStorage path for the “Volume1” link.

Open Volume1 and create a file, just to check that it is working.
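For reference, the same step can be sketched with the failover clustering cmdlets. It assumes the disk is visible to both nodes and that the cluster names the resource "Cluster Disk 1", as in the steps above; the name may differ in your environment.

```powershell
# Add any disk that is visible to all nodes and not yet part of the cluster.
Get-ClusterAvailableDisk | Add-ClusterDisk

# "Cluster Disk 1" is the resource name used in this post; adjust if needed.
Add-ClusterSharedVolume -Name "Cluster Disk 1"

# The volume should then be listed here and appear under C:\ClusterStorage.
Get-ClusterSharedVolume
Get-ChildItem C:\ClusterStorage
```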

To have a more stable cluster, add a quorum witness. Right-click the cluster name and select More Actions / Configure Cluster Quorum Settings.

Since we are on Azure, we will configure an Azure Storage Account as the witness. Select “Advanced Configuration”

and then select “Configure a cloud witness”.

Create an Azure Storage Account.

Add the Storage Account to the virtual network of the VMs, to secure it.

Once the Storage Account is ready, copy and paste its name and key into the cluster quorum wizard.
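The cloud witness can also be configured with a single cmdlet instead of the wizard. This is a sketch with placeholders only; replace them with the name and access key of your own Storage Account. Cloud witness requires Windows Server 2016 or later, which matches the VMs used here.

```powershell
# Placeholders: use your own storage account name and one of its access keys.
Set-ClusterQuorum -CloudWitness `
    -AccountName "<storage-account-name>" `
    -AccessKey "<storage-account-key>"
```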

Now we have a full failover cluster

Server 1:

Server 2:
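A quick way to confirm the final state from either node is to query the cluster with the standard cmdlets. This is only a verification sketch; the exact output depends on your environment.

```powershell
Get-ClusterNode          # both SDVM01 and SDVM02 should report the state "Up"
Get-ClusterSharedVolume  # shows the shared volume and its current owner node
Get-ClusterQuorum        # shows the configured quorum resource
```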

Now we can deploy SQL Server FCI, File Server, etc.!

More info about the Shared Disk: https://docs.microsoft.com/en-us/azure/virtual-machines/windows/disks-shared-enable

Pantelis Apostolidis is a Sr. Specialist, Azure at Microsoft and a former Microsoft Azure MVP. For the last 20 years, Pantelis has been involved in major cloud projects in Greece and abroad, helping companies adopt and deploy cloud technologies and drive business value. He holds many Microsoft Expert certifications, demonstrating his proven experience in delivering high-quality solutions. He is an author and blogger, and he speaks at conferences, workshops and webinars. He is also an active member of several communities, as a moderator at azureheads.gr and autoexec.gr. Follow him on Twitter @papostolidis.

Hello,

We just configured a data disk to be shared between 2 VMs. Little did we know all of this was needed (in AWS it is just plug and play).

Do you offer your services to help with enabling the shared disk to be properly accessible by both VMs?

Thank you.

Hello Esteban,

thank you for being so interested in my blog. I don’t work as an individual. You can find many individual professionals on sites like Fiverr, Upwork, and freelancer.

Thank You for this. There is an important item I do not understand. This article shows that the file system is available simultaneously on both nodes for read/write. I thought it was only available in a standard/passive node where only 1 machine could be operational at a time and have access to the shared drive.

Hi Anthony Dolce, you are right, you can write only from the Cluster Owner machine, and this is what this article shows. You can have read operations simultaneously from all machines. So, all machines in the cluster can list and read the shared disk, but only the owner node can write on it. Exactly the same as an on-premises Windows Server failover cluster.

Hello,

Thanks a lot for your post!

I just created two Azure Windows 2022 Core VMs and joined them to the domain. I installed the Cluster Service on both ends. The service doesn’t start:

The Cluster service cannot be started. An attempt to read configuration data from the Windows registry failed with error ‘2’. Please use the Failover Cluster Management snap-in to ensure that this machine is a member of a cluster. If you intend to add this machine to an existing cluster use the Add Node Wizard. Alternatively, if this machine has been configured as a member of a cluster, it will be necessary to restore the missing configuration data that is necessary for the Cluster Service to identify that it is a member of a cluster. Perform a System State Restore of this machine in order to restore the configuration data.

In order to continue we need the service to be started on both nodes?

Thanks,